Create eksctl YAML Specification

Refer to the following content to create a YAML specification for the EKS cluster and save it in an appropriate location.
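As a minimal sketch of what such a specification might look like, the snippet below writes an eksctl `ClusterConfig` to a file using a shell heredoc. The file name `cluster.yaml`, the cluster name, the region, the instance type, and the node group sizes are all placeholder assumptions rather than values taken from this guide; adjust them to your environment.

```shell
# Write a hypothetical eksctl ClusterConfig to cluster.yaml.
# Every value below (name, region, instance type, sizes) is a placeholder.
cat > cluster.yaml <<'EOF'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: my-eks-cluster      # placeholder cluster name
  region: us-east-1         # placeholder region

iam:
  withOIDC: true            # create the IAM OIDC provider along with the cluster

managedNodeGroups:
  - name: default-workers   # placeholder node group name
    instanceType: m5.xlarge
    desiredCapacity: 3
    minSize: 3
    maxSize: 5
    volumeSize: 100         # node root volume size in GiB
EOF
```

Setting `iam.withOIDC: true` here means the OIDC provider check in the storage steps later in this guide should find an existing provider.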

Create EKS Cluster and Node Group

Run the following command to create the EKS cluster.
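The command could look roughly like the sketch below, wrapped in a small helper function so it can be sourced and reused. The function name `create_eks_cluster` and the default file name `cluster.yaml` are illustrative assumptions; `eksctl create cluster -f` is the eksctl subcommand for creating a cluster from a specification file.

```shell
# Hypothetical helper: create the cluster and node groups from a spec file.
create_eks_cluster() {
  local spec_file="${1:-cluster.yaml}"   # assumed default file name
  eksctl create cluster -f "$spec_file"
}
```

Calling `create_eks_cluster cluster.yaml` starts provisioning. Once it finishes, `kubectl get nodes` should list the worker nodes.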

Nginx Ingress Controller

Install the Nginx Ingress Controller using Helm.
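One possible way to do this is sketched below, using the community `ingress-nginx` chart. The helper name `install_ingress_nginx` and the release and namespace name `ingress-nginx` are assumptions for illustration; this guide may intend different chart values.

```shell
# Hypothetical helper: install (or upgrade) the ingress-nginx chart.
install_ingress_nginx() {
  helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx &&
  helm repo update &&
  helm upgrade --install ingress-nginx ingress-nginx/ingress-nginx \
    --namespace ingress-nginx \
    --create-namespace
}
```

After running `install_ingress_nginx`, `kubectl get svc -n ingress-nginx` should show the controller service and its external address.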

Cert Manager

Install Cert Manager using Helm.
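A minimal sketch of such an installation follows, using the Jetstack chart repository. The helper name `install_cert_manager` and the namespace `cert-manager` are assumptions. The `crds.enabled=true` value applies to recent chart versions; older charts use `installCRDs=true` instead.

```shell
# Hypothetical helper: install (or upgrade) cert-manager together with its CRDs.
install_cert_manager() {
  helm repo add jetstack https://charts.jetstack.io &&
  helm repo update &&
  helm upgrade --install cert-manager jetstack/cert-manager \
    --namespace cert-manager \
    --create-namespace \
    --set crds.enabled=true   # older chart versions: --set installCRDs=true
}
```

After running `install_cert_manager`, `kubectl get pods -n cert-manager` should show the cert-manager pods running.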

Storage

AWS provides various storage options, and you can choose between Amazon Elastic File System (EFS) and Elastic Block Store (EBS) as the default storage for ale based on your use case.

Install AWS EBS CSI Driver

1. Check if the cluster IAM OIDC provider exists. If not, create a new one.
2. Create an IAM role for the EBS CSI driver.
3. Apply the EBS CSI driver as an EKS add-on.
4. Disable the default setting for gp2.
5. Create a new Storage Class (gp3). It will be set as the default using the annotation `storageclass.kubernetes.io/is-default-class: "true"`.
6. Test the PVC creation by running the following command.
7. Check the status of the created PVC. If created successfully, the STATUS should be Bound.
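The storage class and test claim from the EBS steps above might look like the following sketch, which writes two manifests with shell heredocs. The file names and the claim name `ebs-test-claim` are hypothetical, and the parameters are a plausible minimum rather than the exact manifests this guide intends.

```shell
# Hypothetical gp3 StorageClass, marked as the cluster default via the annotation.
cat > gp3-storageclass.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: ebs.csi.aws.com
parameters:
  type: gp3
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF

# Hypothetical test claim that requests a small volume from the gp3 class.
cat > ebs-test-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ebs-test-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: gp3
  resources:
    requests:
      storage: 1Gi
EOF
```

Apply both files with `kubectl apply -f gp3-storageclass.yaml -f ebs-test-pvc.yaml`, then check the claim with `kubectl get pvc ebs-test-claim`. This sketch leaves `volumeBindingMode` at its default of `Immediate`, so the claim can bind without a consuming pod; with `WaitForFirstConsumer` it would stay Pending until a pod uses it.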

Install AWS EFS CSI Driver

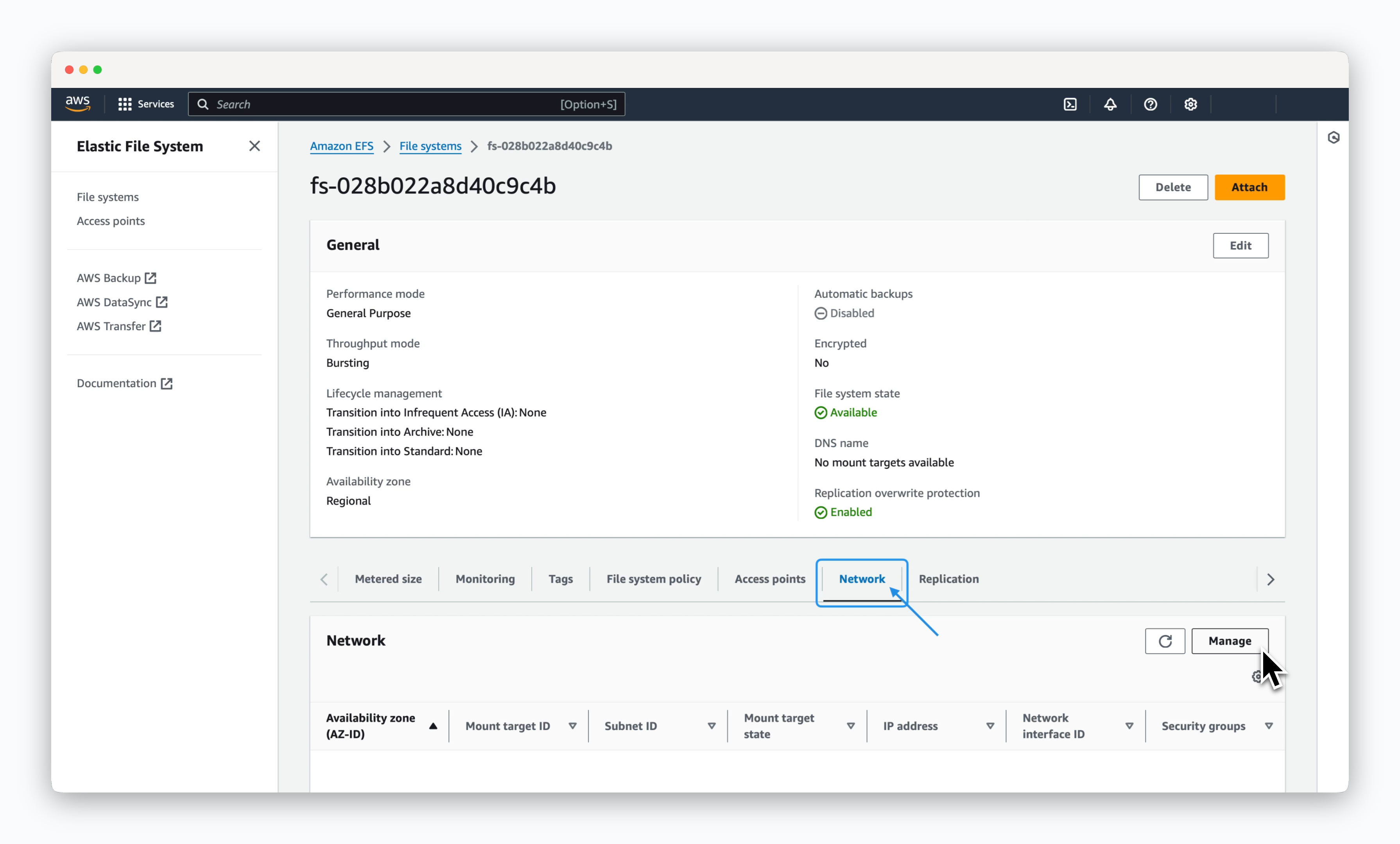

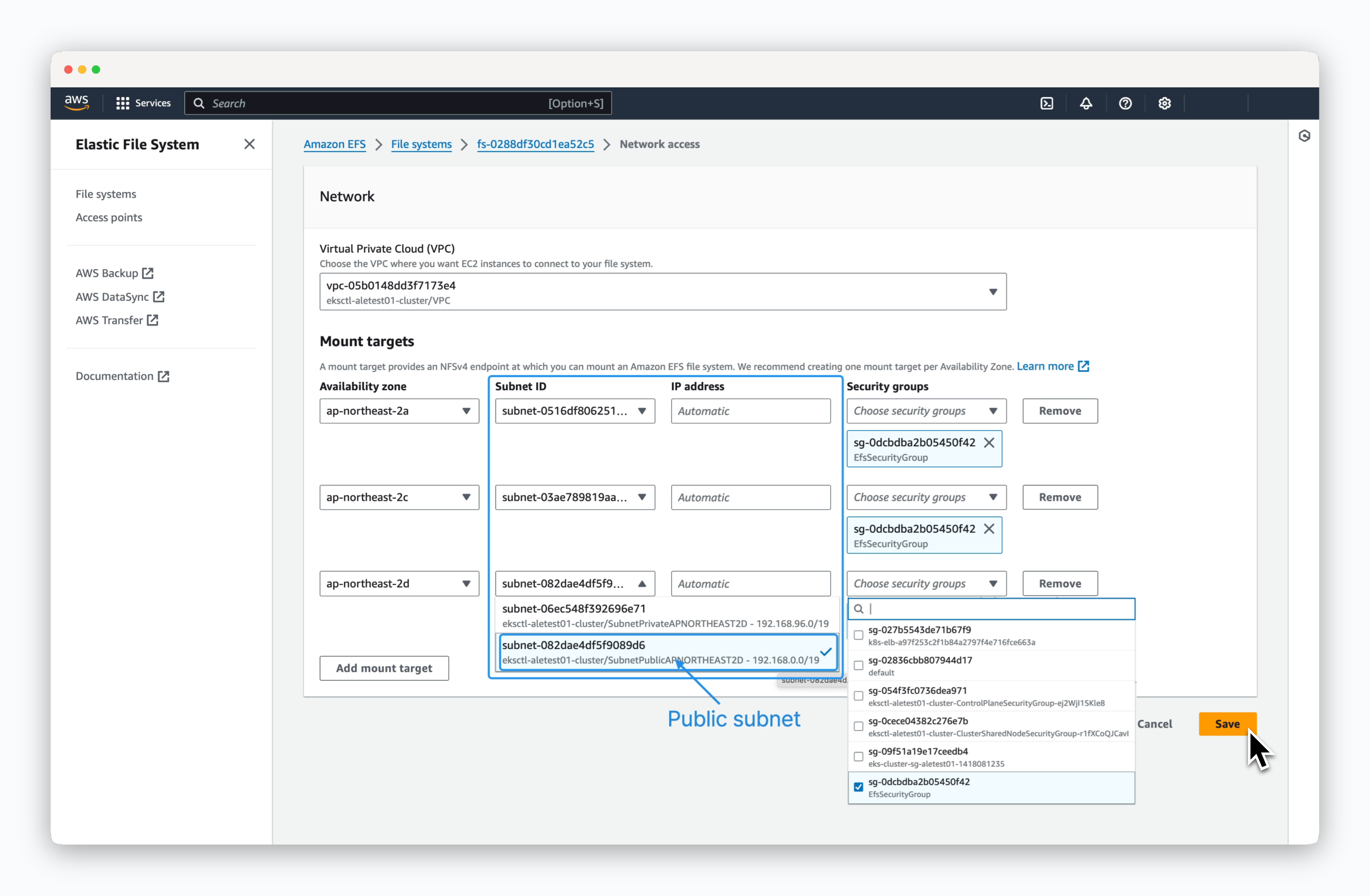

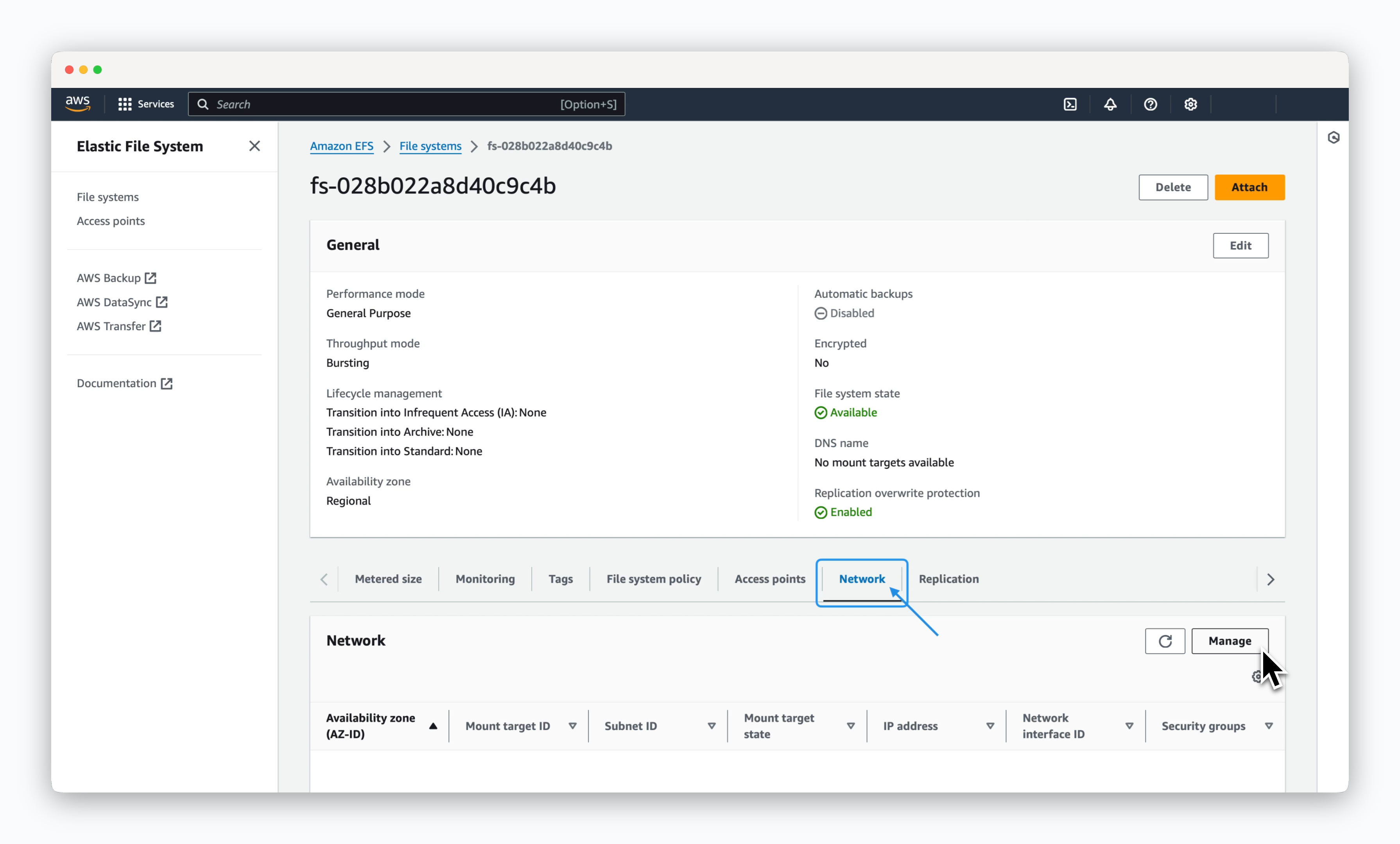

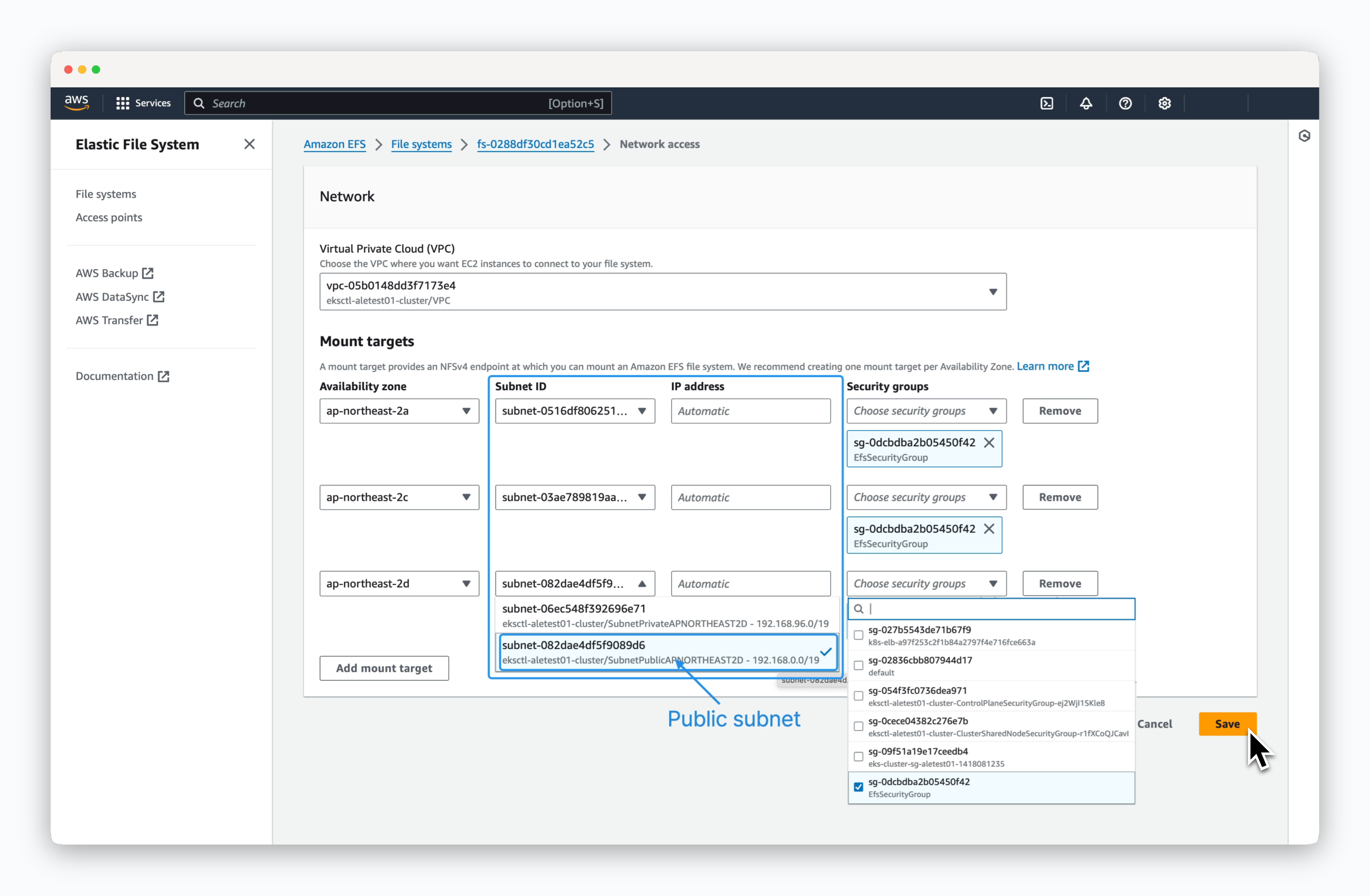

1. Check if the cluster IAM OIDC provider exists. If not, create a new one.
2. Create an IAM role for the EFS CSI driver and attach the required policy.
3. Apply the EFS CSI driver as an EKS add-on.
4. Retrieve information for creating an EFS file system and set it as environment variables.
5. Create a security group and add an ingress rule to allow communication on port 2049 (NFS).
6. Create an AWS EFS file system.
7. Access the created file system page in the AWS EFS management console, navigate to the Network tab, and click the Manage button.
8. Add mount targets based on the Availability Zones (AZ) where the Kubernetes cluster nodes are located. Select the public subnet for each node and choose the EFS security group with the inbound rule allowing port 2049. Then, save the configuration.
9. Create a new Storage Class (efs-sc). It will be set as the default using the annotation `storageclass.kubernetes.io/is-default-class: "true"`.
10. Test the PVC creation by running the following command.
11. Check the status of the created PVC. If created successfully, the STATUS should be Bound.
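The storage class and test claim from the EFS steps above could be sketched as follows. The variable name `FILE_SYSTEM_ID`, its fallback value, the file names, and the claim name `efs-test-claim` are all hypothetical; the parameters follow the dynamic provisioning mode of the EFS CSI driver and may differ from the manifests this guide intends.

```shell
# Assumed environment variable holding the EFS file system ID created earlier.
# The fallback value is only a placeholder, not a real file system.
FILE_SYSTEM_ID="${FILE_SYSTEM_ID:-fs-0123456789abcdef0}"

# Hypothetical efs-sc StorageClass, marked as the cluster default via the annotation.
cat > efs-storageclass.yaml <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: efs-sc
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: ${FILE_SYSTEM_ID}
  directoryPerms: "700"
EOF

# Hypothetical test claim; EFS ignores the requested size, but the field is required.
cat > efs-test-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-test-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi
EOF
```

Apply both files with `kubectl apply -f efs-storageclass.yaml -f efs-test-pvc.yaml`, then check the claim with `kubectl get pvc efs-test-claim`.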

Kubernetes Metrics Server

Install the Metrics Server to collect container resource information within the cluster. Run the following commands to install the Kubernetes Metrics Server.
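A minimal sketch of those commands is shown below. It applies the upstream `components.yaml` release manifest and waits for the deployment to become ready. The helper name `install_metrics_server` and the 120-second timeout are assumptions for illustration.

```shell
# Hypothetical helper: install the Metrics Server from the upstream release manifest
# and wait until its deployment in kube-system has rolled out.
install_metrics_server() {
  kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml &&
  kubectl -n kube-system rollout status deployment/metrics-server --timeout=120s
}
```

After running `install_metrics_server`, `kubectl top nodes` should report CPU and memory usage once the first metrics have been scraped.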